环境oracle 11.2.0.4 2-nodes RAC on linux, 客户做了存储设备更换,像RAC 依赖的设备VD/OCR 通过增加NEW ASM DISKGROUP方式替换,做完以后当时RAC资源都正常,此日重启CRS服务发现crsd.bin进程一直失败,两个节点相同CRS无法启动,CSSD、GIPC\GPNP均启动正常,但ASM实例启动正常,DB手动启动也可以正常open, 后分析crs日志发现crs为启动后crash, 这里简单记录该问题。

CRSD启动失败的常见原因:

– ocssd.bin启动失败

– ASM 无法启动

– OCR 无法访问

– OLR 配置错误路径或两节点不一致

– gpnp profile网络配置错误(crsd.log show Shutdown CacheLocal. my hash ids don’t match’)

– $GRID_HOME/crs/init/.pid 文件不存在或被删除

– private network不通

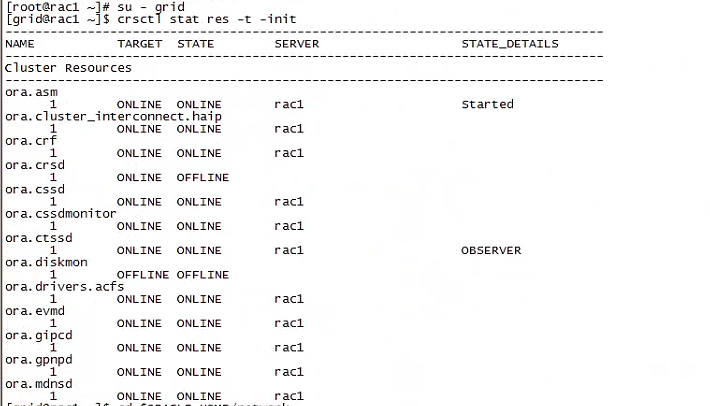

可是这个案例上面的可能都排除了,只有crsd当前是offline.

GI alert log

2023-02-26 21:13:24.904: [gpnpd(62897)]CRS-2328:GPNPD started on node rac1. 2023-02-26 21:13:27.250: [cssd(63016)]CRS-1713:CSSD daemon is started in clustered mode 2023-02-26 21:13:29.083: [ohasd(62747)]CRS-2767:Resource state recovery not attempted for 'ora.diskmon' as its target state is OFFLINE 2023-02-26 21:13:50.466: [cssd(63016)]CRS-1707:Lease acquisition for node rac1 number 1 completed 2023-02-26 21:13:51.709: [cssd(63016)]CRS-1605:CSSD voting file is online: /dev/mapper/STORAGE_ORA_OCR1; details in /u01/app/11.2.0/grid/log/rac1/cssd/ocssd.log. 2023-02-26 21:13:51.713: [cssd(63016)]CRS-1605:CSSD voting file is online: /dev/mapper/STORAGE_ORA_OCR3; details in /u01/app/11.2.0/grid/log/rac1/cssd/ocssd.log. 2023-02-26 21:13:51.725: [cssd(63016)]CRS-1605:CSSD voting file is online: /dev/mapper/STORAGE_ORA_OCR2; details in /u01/app/11.2.0/grid/log/rac1/cssd/ocssd.log. 2023-02-26 21:14:00.844: [cssd(63016)]CRS-1601:CSSD Reconfiguration complete. Active nodes are rac1 . 2023-02-26 21:14:03.257: [ctssd(64745)]CRS-2403:The Cluster Time Synchronization Service on host rac1 is in observer mode. 2023-02-26 21:14:03.514: [ctssd(64745)]CRS-2407:The new Cluster Time Synchronization Service reference node is host rac1. 2023-02-26 21:14:03.515: [ctssd(64745)]CRS-2401:The Cluster Time Synchronization Service started on host rac1. 2023-02-26 21:14:05.250: [ohasd(62747)]CRS-2767:Resource state recovery not attempted for 'ora.diskmon' as its target state is OFFLINE [client(64822)]CRS-10001:26-Feb-23 21:14 ACFS-9391: Checking for existing ADVM/ACFS installation. [client(64827)]CRS-10001:26-Feb-23 21:14 ACFS-9392: Validating ADVM/ACFS installation files for operating system. [client(64829)]CRS-10001:26-Feb-23 21:14 ACFS-9393: Verifying ASM Administrator setup. [client(64832)]CRS-10001:26-Feb-23 21:14 ACFS-9308: Loading installed ADVM/ACFS drivers. [client(64835)]CRS-10001:26-Feb-23 21:14 ACFS-9154: Loading 'oracleoks.ko' driver. [client(64868)]CRS-10001:26-Feb-23 21:14 ACFS-9154: Loading 'oracleadvm.ko' driver. [client(64920)]CRS-10001:26-Feb-23 21:14 ACFS-9154: Loading 'oracleacfs.ko' driver. [client(64992)]CRS-10001:26-Feb-23 21:14 ACFS-9327: Verifying ADVM/ACFS devices. [client(64997)]CRS-10001:26-Feb-23 21:14 ACFS-9156: Detecting control device '/dev/asm/.asm_ctl_spec'. [client(65001)]CRS-10001:26-Feb-23 21:14 ACFS-9156: Detecting control device '/dev/ofsctl'. [client(65006)]CRS-10001:26-Feb-23 21:14 ACFS-9322: completed 2023-02-26 21:14:27.136: [crsd(67060)]CRS-1012:The OCR service started on node rac1. 2023-02-26 21:14:27.304: [evmd(64766)]CRS-1401:EVMD started on node rac1. 2023-02-26 21:14:28.316: [crsd(67060)]CRS-1201:CRSD started on node rac1. 2023-02-26 21:14:31.502: [ohasd(62747)]CRS-2765:Resource 'ora.crsd' has failed on server 'rac1'. 2023-02-26 21:14:33.223: [crsd(67311)]CRS-1012:The OCR service started on node rac1. 2023-02-26 21:14:33.657: [crsd(67311)]CRS-1201:CRSD started on node rac1. 2023-02-26 21:14:35.984: [ohasd(62747)]CRS-2765:Resource 'ora.crsd' has failed on server 'rac1'. 2023-02-26 21:14:37.560: [crsd(68084)]CRS-1012:The OCR service started on node rac1. 2023-02-26 21:14:38.057: [crsd(68084)]CRS-1201:CRSD started on node rac1. 2023-02-26 21:14:40.272: [ohasd(62747)]CRS-2765:Resource 'ora.crsd' has failed on server 'rac1'. 2023-02-26 21:14:41.992: [crsd(68264)]CRS-1012:The OCR service started on node rac1. 2023-02-26 21:14:42.503: [crsd(68264)]CRS-1201:CRSD started on node rac1. 2023-02-26 21:14:44.853: [ohasd(62747)]CRS-2765:Resource 'ora.crsd' has failed on server 'rac1'. 2023-02-26 21:14:46.563: [crsd(68443)]CRS-1012:The OCR service started on node rac1. 2023-02-26 21:14:47.033: [crsd(68443)]CRS-1201:CRSD started on node rac1. 2023-02-26 21:14:49.141: [ohasd(62747)]CRS-2765:Resource 'ora.crsd' has failed on server 'rac1'. 2023-02-26 21:14:50.710: [crsd(68622)]CRS-1012:The OCR service started on node rac1. 2023-02-26 21:14:51.088: [crsd(68622)]CRS-1201:CRSD started on node rac1. 2023-02-26 21:14:53.932: [ohasd(62747)]CRS-2765:Resource 'ora.crsd' has failed on server 'rac1'. 2023-02-26 21:14:55.502: [crsd(68845)]CRS-1012:The OCR service started on node rac1. 2023-02-26 21:14:55.954: [crsd(68845)]CRS-1201:CRSD started on node rac1. 2023-02-26 21:14:57.984: [ohasd(62747)]CRS-2765:Resource 'ora.crsd' has failed on server 'rac1'. 2023-02-26 21:14:59.694: [crsd(69093)]CRS-1012:The OCR service started on node rac1. 2023-02-26 21:15:01.141: [crsd(69093)]CRS-1201:CRSD started on node rac1. 2023-02-26 21:15:03.523: [ohasd(62747)]CRS-2765:Resource 'ora.crsd' has failed on server 'rac1'. 2023-02-26 21:15:05.093: [crsd(69272)]CRS-1012:The OCR service started on node rac1. 2023-02-26 21:15:05.527: [crsd(69272)]CRS-1201:CRSD started on node rac1. 2023-02-26 21:15:07.688: [ohasd(62747)]CRS-2765:Resource 'ora.crsd' has failed on server 'rac1'. 2023-02-26 21:15:09.266: [crsd(69461)]CRS-1012:The OCR service started on node rac1. 2023-02-26 21:15:09.709: [crsd(69461)]CRS-1201:CRSD started on node rac1. 2023-02-26 21:15:11.803: [ohasd(62747)]CRS-2765:Resource 'ora.crsd' has failed on server 'rac1'. 2023-02-26 21:15:13.469: [crsd(69634)]CRS-1012:The OCR service started on node rac1. 2023-02-26 21:15:13.872: [crsd(69634)]CRS-1201:CRSD started on node rac1. 2023-02-26 21:15:17.125: [ohasd(62747)]CRS-2765:Resource 'ora.crsd' has failed on server 'rac1'. 2023-02-26 21:15:17.125: [ohasd(62747)]CRS-2771:Maximum restart attempts reached for resource 'ora.crsd'; will not restart. 2023-02-26 21:15:41.586: [cssd(63016)]CRS-1625:Node rac2, number 2, was shut down

Note:

可见ora.crsd是启动成功,但反复fail再restart,最后放弃。

CRSD LOG

2023-02-26 21:15:13.883: [ CRSCOMM][1472685856] IpcL: gipcListen() succeeded

2023-02-26 21:15:13.883: [CLSFRAME][1472685856] Tints initialized with nodeId: 1 procId: 14710174

2023-02-26 21:15:13.883: [CLSFRAME][1472685856] Starting thread model named: AgfwProxySrvTM

2023-02-26 21:15:13.883: [CLSFRAME][1472685856] Starting thread model named: OcrModuleTM

2023-02-26 21:15:13.883: [CLSFRAME][1472685856] Starting thread model named: PolicyEngineTM

2023-02-26 21:15:13.884: [CLSFRAME][1472685856] Starting thread model named: TimerSharedTM

2023-02-26 21:15:13.884: [CLSFRAME][1472685856] New Framework state: 3

2023-02-26 21:15:13.884: [ CRSRPT][626431744]{1:30336:2} Enabled

2023-02-26 21:15:13.884: [ CRSPE][628532992]{1:30336:2} PE Role|State Update: old role [INVALID] new [INVALID]; old state [Not yet initialized] new [Enabling: waiting for role]

2023-02-26 21:15:13.885: [ CRSSE][626431744]{1:30336:2} Master Change Event; New Master Node ID:1 This Node's ID:1

2023-02-26 21:15:13.885: [ CRSPE][628532992]{1:30336:2} PE Role|State Update: old role [INVALID] new [MASTER]; old state [Enabling: waiting for role] new [Configuring]

2023-02-26 21:15:13.885: [ CRSPE][628532992]{1:30336:2} PE MASTER NAME: rac1

2023-02-26 21:15:13.885: [ CRSPE][628532992]{1:30336:2} Starting to read configuration

2023-02-26 21:15:13.891: [ CRSPE][628532992]{1:30336:2} Reading (2) servers

2023-02-26 21:15:13.893: [ CRSPE][628532992]{1:30336:2} DM: set global config version to: 387

2023-02-26 21:15:13.893: [ CRSPE][628532992]{1:30336:2} DM: set pool freeze timeout to: 60000

2023-02-26 21:15:13.893: [ CRSPE][628532992]{1:30336:2} DM: Set event seq number to: 42300000

2023-02-26 21:15:13.893: [ CRSPE][628532992]{1:30336:2} DM: Set threshold event seq number to: 42380000

2023-02-26 21:15:13.893: [ CRSPE][628532992]{1:30336:2} Sent request to write event sequence number 42400000 to repository

2023-02-26 21:15:13.922: [ CRSPE][628532992]{1:30336:2} Wrote new event sequence to repository

2023-02-26 21:15:13.945: [ CRSPE][628532992]{1:30336:2} Reading (16) types

2023-02-26 21:15:13.952: [ CRSPE][628532992]{1:30336:2} Reading (4) server pools

2023-02-26 21:15:13.955: [ CRSPE][628532992]{1:30336:2} Reading (22) resources

2023-02-26 21:15:14.228: [ CRSPE][628532992]{1:30336:2} Finished reading configuration. Parsing...

2023-02-26 21:15:14.228: [ CRSPE][628532992]{1:30336:2} Parsing resource types...

2023-02-26 21:15:14.228: [ CRSD][628532992]{1:30336:2} Dump State Starting ...

2023-02-26 21:15:14.228: [ CRSD][628532992]{1:30336:2} State Dump for RTILock

2023-02-26 21:15:14.228: [ CRSPE][628532992]{1:30336:2} Dumping PE Data Model...:DM has [0 resources][0 types][0 servers][0 spools]

— NO ERROR

ohasd/orarootagent_root log

2023-02-26 21:15:08.705: [ora.crsd][1399535360]{0:11:17} [check] DaemonAgent::check returned 0

2023-02-26 21:15:08.706: [ AGFW][2038036224]{0:11:17} ora.crsd 1 1 state changed from: STARTING to: PARTIAL

2023-02-26 21:15:08.706: [ AGFW][2038036224]{0:11:17} Started implicit monitor for [ora.crsd 1 1] interval=30000 delay=30000

2023-02-26 21:15:08.706: [ AGFW][2038036224]{0:11:17} Agent sending last reply for: RESOURCE_START[ora.crsd 1 1] ID 4098:493

2023-02-26 21:15:11.635: [ USRTHRD][1401636608]{0:11:17} Thread:[DaemonCheck:crsd]Daemon Select Thread exiting

2023-02-26 21:15:11.635: [ USRTHRD][1401636608]{0:11:17} Thread:[DaemonCheck:crsd]Initiating a check action

2023-02-26 21:15:11.635: [ USRTHRD][1401636608]{0:11:17} Check action requested by agent etnry point for ora.crsd

2023-02-26 21:15:11.636: [ USRTHRD][1401636608]{0:11:17} Thread:[DaemonCheck:crsd]isRunning is reset to false here

2023-02-26 21:15:11.636: [ AGFW][2038036224]{0:11:17} Agent received the message: RESOURCE_PROBE[ora.crsd 1 1] ID 4097:272

2023-02-26 21:15:11.636: [ AGFW][2038036224]{0:11:17} Preparing CHECK command for: ora.crsd 1 1

2023-02-26 21:15:11.636: [ COMMCRS][2034026240]clscsendx: (0x7f8b1802d420) Physical connection (0x7f8b1802cd20) not active

[ clsdmc][2034026240]Failed to send meta message to connection [(ADDRESS=(PROTOCOL=ipc)(KEY=rac1DBG_CRSD))][11]

2023-02-26 21:15:11.637: [ora.crsd][2034026240]{0:11:17} [check] Error = error 11 encountered when sending messages to CRSD

2023-02-26 21:15:11.637: [ora.crsd][2034026240]{0:11:17} [check] Calling PID check for daemon

2023-02-26 21:15:11.637: [ora.crsd][2034026240]{0:11:17} [check] Process id 69461 translated to

2023-02-26 21:15:11.801: [ COMMCRS][1406367488]clsc_connect: (0x7f8b5800d830) no listener at (ADDRESS=(PROTOCOL=ipc)(KEY=rac1DBG_CRSD))

[ clsdmc][2034026240]Fail to connect (ADDRESS=(PROTOCOL=ipc)(KEY=rac1DBG_CRSD)) with status 9

2023-02-26 21:15:11.801: [ora.crsd][2034026240]{0:11:17} [check] Error = error 9 encountered when connecting to CRSD

2023-02-26 21:15:11.801: [ora.crsd][2034026240]{0:11:17} [check] DaemonAgent::check returned 1

2023-02-26 21:15:11.802: [ AGFW][2038036224]{0:11:17} ora.crsd 1 1 state changed from: PARTIAL to: OFFLINE

crsdOUT.log

当crsd进程crash时,会把call stack 写进out, 其它进程也一样,如agent,ocssd.bin

Dumping CRSD in-memory trace

CRSD Exiting in signalhandler

CRSD handling signal 6

CRSD Skipping dumps. Signal 6 count 2

2023-02-26 21:14:53

Changing directory to /u01/app/11.2.0/grid/log/rac1/crsd

2023-02-26 21:14:53

CRSD REBOOT

CRSD handling signal 11

Dumping CRSD state

Dumping CRSD stack trace

----- Call Stack Trace -----

calling call entry argument values in hex

location type point (? means dubious value)

-------------------- -------- -------------------- ----------------------------

sclssutl_sigdump()+ call kgdsdst() 000000000 ? 000000000 ?

492 7F5A8D303210 ? 7F5A8D3032E8 ?

7F5A8D307D90 ? 000000003 ?

sclssutl_signalhand call sclssutl_sigdump() 00000000B ? 000000000 ?

ler()+70 7F5A8D303210 ? 7F5A8D3032E8 ?

7F5A8D307D90 ? 000000003 ?

__sighandler() call sclssutl_signalhand 00000000B ? 000000000 ?

ler() 7F5A8D303210 ? 7F5A8D3032E8 ?

7F5A8D307D90 ? 000000003 ?

_ZNSs6assignERKSs() signal __sighandler() 7F5A8D308CB8 ? 7F5A0000D7D8 ?

+19 31EB6F32D8 ? 000000000 ?

00000000E ? 7F5A8D3091D0 ?

_ZN3cls15StringToke call _ZNSs6assignERKSs() 7F5A8D308CB8 ? 7F5A0000D7D8 ?

nizerC9ERKSsS2_b()+ 31EB6F32D8 ? 000000000 ?

139 00000000E ? 7F5A8D3091D0 ?

_ZN3cls15StringToke call _ZN3cls15StringToke 7F5A8D308CB0 ? 7F5A0000D7D8 ?

nizerC1ERKSsS2_b()+ nizerC9ERKSsS2_b() 00138F1E8 ? 000000000 ?

59 00000000E ? 7F5A8D3091D0 ?

_ZN3cls15StringToke call _ZN3cls15StringToke 7F5A8D308CB0 ? 7F5A0000D7D8 ?

nizer8toVectorERSt6 nizerC1ERKSsS2_b() 00138F1E8 ? 000000000 ?

vectorISsSaISsEERKS 00000000E ? 7F5A8D3091D0 ?

sS6_()+71

_ZN6cls_pe12Resourc call _ZN3cls15StringToke 7F5A8D3090D8 ? 7F5A0000D7D8 ?

eType25reconstructF nizer8toVectorERSt6 00138F1E8 ? 000000000 ?

romRepositoryERSt3s vectorISsSaISsEERKS 00000000E ? 7F5A8D3091D0 ?

etIPS0_St4lessIS2_E sS6_()

SaIS2_EERKSt3mapISs

S8_ISsSsS3_ISsESaIS

t4pairIKSsSsEEES9_S

aISA_ISB_SE_EEE()+7

86

_ZN6cls_pe18PolicyE call _ZN6cls_pe12Resourc 7F5A8D309940 ? 7F5A00006CD0 ?

ngineModule33report eType25reconstructF 00138F1E8 ? 000000000 ?

ConfigReadVThreadCo romRepositoryERSt3s 00000000E ? 7F5A8D3091D0 ?

mpletionEPNS0_17Rea etIPS0_St4lessIS2_E

dConfigContextE()+5 SaIS2_EERKSt3mapISs

92 S8_ISsSsS3_ISsESaIS

t4pairIKSsSsEEES9_S

aISA_ISB_SE_EEE()

_ZN6cls_pe18PolicyE call _ZN6cls_pe18PolicyE 001BE7360 ? 7F5A00006CA0 ?

ngineModule26readTy ngineModule33report 00138F1E8 ? 000000000 ?

peAttributesCallBac ConfigReadVThreadCo 00000000E ? 7F5A8D3091D0 ?

kEPN7cls_ocr16Multi mpletionEPNS0_17Rea

SubKeyFetchE()+1206 dConfigContextE()

_ZN3cls11ThreadMode call _ZN6cls_pe18PolicyE 001BE7360 ? 7F5A00016820 ?

l12processQueueEP7s ngineModule26readTy 00138F1E8 ? 000000000 ?

ltstid()+3632 peAttributesCallBac 00000000E ? 7F5A8D3091D0 ?

kEPN7cls_ocr16Multi

SubKeyFetchE()

_ZN3cls11ThreadMode call _ZN3cls11ThreadMode 001B5B650 ? 7F5A8D30AC30 ?

l5runTMEPv()+140 l12processQueueEP7s 00138F1E8 ? 000000000 ?

ltstid() 00000000E ? 7F5A8D3091D0 ?

_ZN13CLS_Threading1 call _ZN3cls11ThreadMode 001B5B650 ? 7F5A8D30AC30 ?

3CLSthreadMain8cppS l5runTMEPv() 00138F1E8 ? 000000000 ?

tartEPv()+73 00000000E ? 7F5A8D3091D0 ?

start_thread()+209 call _ZN13CLS_Threading1 001C46FC0 ? 7F5A8D30AC30 ?

3CLSthreadMain8cppS 00138F1E8 ? 000000000 ?

tartEPv() 00000000E ? 7F5A8D3091D0 ?

clone()+109 call start_thread() 7F5A8D30B700 ? 7F5A8D30AC30 ?

Note:

oops! 看不懂的call stack调用,应该是OS层的调用。

解决方法

Deconfig/Reconfig 分别在两个节点操作,不会重建OCR,只是重新配置集群信息。

# root user # <$GRID_HOME>/crs/install/rootcrs.pl -deconfig -force # <$GRID_HOME>/root.sh